AI and Accessibility: A New Era of Inclusion

Artificial Intelligence (AI) is becoming a catalyst for change across countless industries — but one of its most profound impacts is unfolding in the world of digital accessibility. The relationship between AI and accessibility represents a new era where inclusive technologies are not just supplementary but foundational to how we design and experience digital environments.

To better understand this transformation, here are several ways AI is currently enhancing accessibility for users with different types of impairments:

- Speech recognition and natural language processing. Enable real-time speech-to-text captioning and voice-based commands for users with hearing or mobility limitations.

- Computer vision. Identifies and interprets visual content to generate meaningful image descriptions for blind or low-vision individuals.

- Predictive assistance. Anticipates user needs, helping those with cognitive challenges navigate digital content with fewer steps and less confusion.

- Personalized UI adaptations. AI dynamically adjusts interface elements — such as font size, color contrast, and spacing — based on user behavior and preferences.

These innovations show that technology-driven inclusion is no longer just an ideal: AI is turning it into an active, scalable solution. As development in this space accelerates, it becomes increasingly important for creators, developers, and decision-makers to treat inclusive practices as a default, not an afterthought.
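The “Personalized UI adaptations” item mentions color contrast. Before an adaptive interface swaps in a new text color, it can check the result against the WCAG 2.x contrast-ratio formula. The Python sketch below assumes colors arrive as 8-bit RGB tuples. The function names are illustrative and not part of any specific product. The 4.5:1 default is the WCAG AA minimum for normal-size text.

```python
def linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel value (0-255) to linear light."""
    c = channel / 255
    # 0.04045 is the sRGB threshold; older WCAG text lists 0.03928,
    # which gives identical results for 8-bit values.
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple[int, int, int]) -> float:
    """WCAG relative luminance of an (r, g, b) color."""
    r, g, b = (linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: tuple[int, int, int], bg: tuple[int, int, int]) -> float:
    """Contrast ratio (L1 + 0.05) / (L2 + 0.05), where L1 is the lighter color."""
    lighter, darker = sorted(
        (relative_luminance(fg), relative_luminance(bg)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


def pick_text_color(bg, candidates, minimum=4.5):
    """Return the first candidate that meets `minimum` against `bg`;
    if none does, fall back to the highest-contrast candidate."""
    for color in candidates:
        if contrast_ratio(color, bg) >= minimum:
            return color
    return max(candidates, key=lambda color: contrast_ratio(color, bg))


if __name__ == "__main__":
    white = (255, 255, 255)
    print(round(contrast_ratio((0, 0, 0), white), 2))  # 21.0
    print(pick_text_color(white, [(200, 200, 200), (118, 118, 118)]))  # (118, 118, 118)
```

Checking candidates in order lets a design system list its preferred colors first. The function only falls back to the strongest option when nothing meets the threshold.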

How AI Benefits People With Disabilities

Artificial Intelligence is redefining what digital inclusion means. Instead of simply accommodating users with disabilities, AI now actively enhances how they engage with content, services, and communication. These benefits are not theoretical — they’re practical, measurable, and deeply empowering.

Here’s a breakdown of how AI delivers specific value across four major types of disabilities:

Visual impairments 👁

AI enhances independence for blind and low-vision users by translating visual content into understandable alternatives:

- Real-time image descriptions. AI can analyze images on websites and describe them through audio or text, giving users access to visuals they cannot see directly.

- Text-to-speech functionality. Written content on webpages, apps, or documents is converted into audio that users can listen to on demand.

- Object and face recognition. Machine learning identifies people, places, or items, offering useful cues in both digital and real-world interactions.

- Voice-assisted navigation. AI-powered tools can guide users through digital layouts or physical spaces using verbal directions and contextual feedback.

Together, these features allow visually impaired users to interpret their surroundings and engage online without relying entirely on third-party support or rigid tools.
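Before an AI model can describe images on a page, a tool first has to find the images that lack a text alternative. Below is a minimal sketch of that first step using Python’s standard-library `html.parser`. The class and function names are hypothetical. Generating the descriptions themselves, for example with a vision model, is left out.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Collects the src of every <img> element that has no alt attribute."""

    def __init__(self) -> None:
        super().__init__()
        self.missing: list[str] = []

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names for us;
        # self-closing <img ... /> tags also end up here by default.
        if tag != "img":
            return
        names = {name for name, _ in attrs}
        # alt="" is deliberately NOT flagged: WCAG uses an empty alt
        # to mark decorative images that screen readers should skip.
        if "alt" not in names:
            src = dict(attrs).get("src") or "<no src>"
            self.missing.append(src)


def find_images_missing_alt(html: str) -> list[str]:
    """Return the src of each image that needs a description."""
    finder = MissingAltFinder()
    finder.feed(html)
    finder.close()
    return finder.missing


if __name__ == "__main__":
    page = (
        '<img src="chart.png" alt="Sales chart">'
        '<img src="team.jpg">'
        '<img src="divider.svg" alt="">'
    )
    print(find_images_missing_alt(page))  # ['team.jpg']
```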

Auditory impairments 🦻

For those with hearing loss, AI technologies help convert sound into accessible formats to facilitate seamless interaction:

- Live speech transcription. Conversations, meetings, or videos are transcribed in real time, letting users follow along without needing to lip-read or rely on others.

- Automatic video captioning. AI generates on-screen text for video and audio content, supporting accessibility across educational, professional, and entertainment platforms.

- Sign language interpretation. Emerging AI tools can translate sign language into text or speech, and vice versa, supporting two-way communication in real-time settings, although their accuracy still trails that of human interpreters.

- Sound-to-visual alerts. Environmental sounds like alarms or knocks are translated into on-screen or tactile notifications for increased awareness.

By transforming spoken and environmental audio into readable and visible outputs, AI allows users to participate fully in social, educational, and professional interactions.
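Automatic captioning usually ends with timed text that a video player can display. The Python below is a sketch that assumes a speech recognizer has already produced transcript segments with start and end times in seconds. The `Segment` type is hypothetical. The code formats those segments as WebVTT, the caption format used by the HTML `<track>` element.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One recognized phrase; times are in seconds (hypothetical input type)."""
    start: float
    end: float
    text: str


def format_timestamp(seconds: float) -> str:
    """Format seconds as a WebVTT timestamp: HH:MM:SS.mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


def to_webvtt(segments: list[Segment]) -> str:
    """Build a WebVTT file: a header, then one cue per segment."""
    lines = ["WEBVTT", ""]
    for seg in segments:
        lines.append(f"{format_timestamp(seg.start)} --> {format_timestamp(seg.end)}")
        lines.append(seg.text)
        lines.append("")  # a blank line ends each cue
    return "\n".join(lines)


if __name__ == "__main__":
    print(to_webvtt([
        Segment(0.0, 2.4, "Welcome to today's lecture."),
        Segment(2.4, 5.1, "Let's begin."),
    ]))
```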

Motor impairments ♿

AI allows users with mobility limitations to interact with websites and applications through alternative input methods:

- Voice-driven navigation. Users can perform searches, click buttons, and type messages by speaking commands, eliminating the need for physical interaction.

- Eye-tracking control. Specialized tools use eye movement to control the cursor, enabling users to click, scroll, and navigate with precision.

- Facial gesture commands. Head nods or facial movements are interpreted by AI to execute common digital actions like clicking or switching tabs.

- Automated task execution. AI automates repetitive actions, reducing effort for users who may find frequent gestures painful or impossible.

With these capabilities, users can engage in digital activities independently — whether filling out forms, shopping online, or attending virtual events.
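Voice-driven navigation needs a layer that maps what the speech recognizer heard onto concrete interface actions. The following is a minimal Python sketch that assumes the recognizer returns plain text. The command phrases and action names are hypothetical. The standard-library `difflib.get_close_matches` tolerates small recognition errors, such as a dropped letter.

```python
import difflib

# Hypothetical vocabulary: spoken phrase -> interface action identifier.
COMMANDS = {
    "scroll down": "scroll_down",
    "scroll up": "scroll_up",
    "go back": "navigate_back",
    "next tab": "switch_tab_next",
    "click submit": "click_submit",
}


def match_command(transcript: str, commands=COMMANDS, cutoff: float = 0.75):
    """Return the action for a recognized phrase, or None if nothing is close."""
    # Normalize case and collapse repeated whitespace.
    phrase = " ".join(transcript.lower().split())
    if phrase in commands:
        return commands[phrase]
    # Fuzzy fallback: the single closest phrase above the similarity cutoff.
    matches = difflib.get_close_matches(phrase, list(commands), n=1, cutoff=cutoff)
    return commands[matches[0]] if matches else None


if __name__ == "__main__":
    for heard in ["Scroll Down", "scrol down", "order pizza"]:
        print(heard, "->", match_command(heard))
```

A higher `cutoff` makes accidental matches rarer but rejects more slightly misheard commands. Returning `None` lets the interface ask the user to repeat the command instead of guessing.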

Cognitive impairments 🧠

AI also offers personalized support for users with cognitive challenges, helping to reduce complexity and support focus:

- Streamlined interface presentation. AI can hide unnecessary content, reduce visual distractions, and create clean layouts for better comprehension.

- Step-by-step guidance. Tools can break complex workflows into smaller, manageable tasks, reducing confusion and increasing completion rates.

- Smart reminders and prompts. AI generates timely nudges or alerts that support memory and assist with scheduling or task management.

- Personalized content suggestions. AI learns from past behavior to surface relevant content while avoiding information overload.

These tools can help users with ADHD, autism, or memory impairments manage their time, stay organized, and complete tasks with greater ease and autonomy.
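The “Step-by-step guidance” item describes splitting a long workflow into smaller steps. The presentation side of that idea can be sketched in Python as follows. A fixed number of fields per step stands in for whatever grouping an AI model would choose. The function name and field names are hypothetical.

```python
def split_into_steps(fields: list[str], per_step: int = 3) -> list[dict]:
    """Group form fields into numbered steps, each with a progress label."""
    if per_step < 1:
        raise ValueError("per_step must be at least 1")
    total = (len(fields) + per_step - 1) // per_step  # ceiling division
    return [
        {
            "label": f"Step {i + 1} of {total}",
            "fields": fields[i * per_step:(i + 1) * per_step],
        }
        for i in range(total)
    ]


if __name__ == "__main__":
    form = ["name", "email", "phone", "street", "city", "postcode", "notes"]
    for step in split_into_steps(form):
        print(step["label"], step["fields"])
```

Showing “Step N of M” gives users a clear sense of progress, which supports the goal of reducing confusion on long forms.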

Across all of these areas, AI’s value for people with disabilities comes down to one powerful outcome: better access to a digital world that too often leaves them behind. Through smart, adaptive technology, AI is helping reshape the web into a more inclusive and user-aware space for everyone.

Challenges and Ethical Considerations

While the relationship between AI and accessibility holds remarkable promise, it also introduces a new set of ethical challenges. As these technologies are increasingly integrated into accessibility solutions, designers and developers must carefully consider how they are built, deployed, and maintained.

Tools that are meant to help can unintentionally cause harm if they’re not developed with ethical foresight. From bias in machine learning to privacy risks in data processing, the very systems designed to enhance inclusion can end up reinforcing inequality.

Below is a comparison of common risks and the corresponding ethical practices that help mitigate them:

| Ethical Challenge | Responsible Practice |
|---|---|
| Algorithmic bias: AI models may misrepresent or exclude people with disabilities due to flawed training data. | Inclusive dataset training: Use diverse, representative datasets that reflect a wide spectrum of disability experiences and backgrounds. |
| Privacy violations: Sensitive data such as speech, biometrics, or health info may be collected without informed consent. | Privacy-first architecture: Implement data minimization, anonymization, and local processing wherever possible to protect user privacy. |
| Over-reliance on automation: Fully automated tools can overlook individual needs or lack options for user control. | Transparency and user control: Allow users to understand, adjust, or disable automated features to regain control of their experience. |
| One-size-fits-all design: Solutions that assume uniform needs may serve only a narrow user group, leaving others behind. | Collaborative design practices: Involve people with disabilities in every stage of development, from ideation to testing. |

These comparisons make it clear: accessible AI must be developed with caution and empathy. It’s not enough for tools to function — they must do so fairly, transparently, and with respect for user dignity.
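As one concrete instance of the “Privacy-first architecture” practice in the table, an accessibility feature can keep only the fields it needs. It can also replace the raw user ID with a keyed hash before anything is stored. The Python sketch below uses hypothetical field names. Keyed hashing (HMAC-SHA256 here) is pseudonymization rather than full anonymization: anyone holding the key can re-link records to known user IDs.

```python
import hashlib
import hmac

# Hypothetical allowlist: only the settings the feature actually needs.
ALLOWED_FIELDS = {"font_scale", "high_contrast", "captions_enabled"}


def minimize_record(record: dict, secret_key: bytes) -> dict:
    """Drop every field not on the allowlist and pseudonymize the user ID."""
    kept = {key: value for key, value in record.items() if key in ALLOWED_FIELDS}
    user_id = record.get("user_id")
    if user_id is not None:
        # Keyed hash: the same user always maps to the same reference,
        # but linking it back to an ID requires the secret key.
        kept["user_ref"] = hmac.new(
            secret_key, str(user_id).encode("utf-8"), hashlib.sha256
        ).hexdigest()
    return kept


if __name__ == "__main__":
    raw = {
        "user_id": 4821,
        "email": "person@example.com",
        "voice_sample": b"\x00\x01",
        "font_scale": 1.5,
        "high_contrast": True,
    }
    print(sorted(minimize_record(raw, b"replace-with-a-real-secret")))
```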

Where AI and Accessibility Are Working Together

The power of accessibility-focused AI is best seen in the real world. Across industries — from healthcare to education and public services — organizations are already proving how AI can be integrated to improve everyday experiences for people with disabilities. These practical examples show that accessible innovation is not just possible — it’s already happening.

🏥 Healthcare

In healthcare, AI is transforming how support is delivered to patients with disabilities. Tools like predictive communication systems help non-verbal individuals express symptoms or needs by interpreting gestures, images, or limited inputs. AI is also used in hospitals and assisted living environments to monitor for abnormal movement patterns or signs of distress, triggering alerts that can prevent accidents or emergencies.

🎓 Education

Educational institutions are using AI to make learning environments more adaptive and accessible. Students with auditory impairments benefit from AI-powered captioning during live lectures, while those with learning differences like dyslexia use reading assistants that convert complex language into simpler formats or audio.

💼 Public services

AI is also shaping how people access essential services and support systems. On government websites and transportation hubs, chatbots and kiosks are now equipped with accessible features like voice control, simplified menus, and customizable response speeds. These changes allow users with motor, cognitive, or sensory challenges to interact with systems that were previously difficult or unusable.

These implementation examples reveal how accessibility and AI are evolving together. The more industries embrace inclusive innovation, the more we move toward a digital ecosystem where independence, dignity, and equal opportunity are not just ideals — but built-in realities.

How to Make Your Website Accessibility Compliant

With Elfsight’s accessibility widget, you can start bringing your website in line with ADA or EAA requirements quickly and without writing code. This no-code tool simplifies building an accessible interface, offering ready-made user profiles and built-in compliance analysis to help you address essential legal standards while improving usability for everyone who visits.

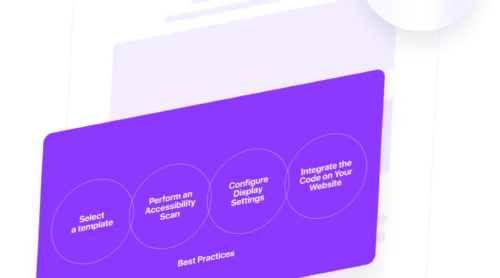

Here’s how to get your accessibility solution up and running with Elfsight:

- Pick a Template. Open the Elfsight editor and choose a widget template, then click “Continue with this template” to start customizing the widget to suit your needs.

- Check Accessibility Status. Enter your website URL in the audit tool and click “Check”. The built-in scanner reviews your website and produces a detailed report highlighting areas that need improvement.

- Set Behavior and Design Preferences. Within the “Settings” tab, you can define the widget language, reposition the button, manage how long user preferences are saved, and apply custom CSS or JS if you need advanced styling or behavior.

- Embed the Widget on Your Website. Click “Add to website for free” to generate your installation code. Paste this code into your site’s HTML, just before the closing </body> tag, then save and publish your changes to activate the widget across your entire site.

Once deployed, the widget helps you work toward EAA and ADA compliance while creating a more accessible and user-friendly environment for all visitors.
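For sites built from static HTML files or templates, the “paste before the closing </body> tag” step can also be scripted. Below is a minimal Python sketch. The snippet is a placeholder comment, not Elfsight’s actual installation code, which comes from the editor. The function name is hypothetical.

```python
import re


def insert_before_body_close(html: str, snippet: str) -> str:
    """Insert `snippet` just before the last closing </body> tag.

    Returns the page unchanged if the snippet is already present,
    so running the script twice does not install the widget twice.
    """
    if snippet in html:
        return html
    matches = list(re.finditer(r"</body\s*>", html, flags=re.IGNORECASE))
    if not matches:
        raise ValueError("no closing </body> tag found")
    position = matches[-1].start()
    return html[:position] + snippet + "\n" + html[position:]


if __name__ == "__main__":
    page = "<html><body><p>Welcome</p></body></html>"
    placeholder = "<!-- installation code copied from the widget editor -->"
    print(insert_before_body_close(page, placeholder))
```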


AI Accessibility Tools Examples

Not all assistive tools are created equal — some rely on simple automation, while others are built on sophisticated artificial intelligence. In this section, we’ll focus on accessibility AI tools that make direct use of machine learning, computer vision, and speech recognition to create smarter, more adaptive experiences for people with disabilities.

Below is a curated list of tools that either use AI as their core functionality or integrate AI-based components in a meaningful way. Together, they show how machine learning and real-time captioning are moving assistive technology beyond traditional static solutions:

| Tool | Function | Primary Use Case |
|---|---|---|
| Seeing AI | Uses advanced computer vision to narrate the user’s surroundings: reading text, describing people, identifying objects, and recognizing currency. | Blind or low-vision users needing live interpretation of visual content. |
| Google Live Transcribe | Uses real-time AI speech recognition to convert spoken words into on-screen text across dozens of languages and dialects. | Deaf or hard-of-hearing users who need real-time conversation transcription. |
| Otter.ai | Delivers live transcription, speaker identification, and searchable AI-generated summaries of meetings and lectures. | Professionals and students seeking accessible, searchable notes. |
| Be My Eyes + AI Virtual Volunteer | Offers an AI assistant (powered by OpenAI) alongside its network of human volunteers to give blind and low-vision users real-time visual interpretation. | Blind individuals seeking visual assistance without having to rely on another person around the clock. |
| Scribely AI | Uses natural language generation to create context-aware alt text for images at scale, supporting WCAG requirements for text alternatives. | Developers or publishers managing media-heavy websites that need automated image descriptions. |
| Aira | Combines wearable tech with live agents and AI vision tools to help blind and low-vision users navigate environments. | Mobility support in unfamiliar settings like airports, offices, or streets. |

These tools highlight AI’s growing ability to deliver personalized, adaptive assistance across multiple accessibility needs. Unlike static or rules-based alternatives, AI-powered tools can interpret context, respond to it, and adapt to each user over time, which makes them more scalable and more effective.

As AI technology matures, we can expect these tools to become even more intuitive and widely available. From educational institutions to personal devices and corporate websites, the future of accessibility will increasingly rely on intelligent tools that adjust to user needs rather than requiring users to adapt to digital limitations.

Conclusion

The evolution of AI for accessibility is more than a technological trend — it’s a movement toward creating a digital world where no one is left behind. From intelligent captioning tools and adaptive learning platforms to vision-assisting apps and voice-controlled interfaces, AI is proving its ability to bridge long-standing gaps in usability, independence, and digital equality.

With the right focus, AI has the power to deliver digital empowerment for all — not just by offering access, but by offering agency. The challenge now is to ensure that accessibility remains central to AI’s growth, driving innovation that elevates everyone equally.