Imagine having an AI assistant that actually knows your business – one that can answer customer questions about your specific products, guide new employees through your exact policies, or help your sales team craft messages based on real customer history. That’s not a distant fantasy anymore. It’s something you can build this afternoon.

Training ChatGPT on your own data has shifted from a complex engineering project to something remarkably accessible. Whether you’re a small business owner who’s never written a line of code or a developer building enterprise-scale applications, there’s a path that fits your needs and technical comfort level.

- Creating custom GPTs with no coding using OpenAI’s GPT Builder

- API integration and fine-tuning for advanced developer implementations

- Comparing no-code platforms and choosing the right solution for your needs

- Data security, privacy controls, and compliance considerations

- Real-world business applications delivering measurable ROI

Let’s walk through exactly how to make ChatGPT work with your unique knowledge base – from the simplest no-code approach to advanced API integrations that power Fortune 500 implementations – with realistic timelines and actual pricing data.

Creating Custom GPTs

You don’t need to be a developer to create a specialized version of ChatGPT. OpenAI’s GPT Builder puts custom AI within reach of anyone willing to spend a few minutes learning the interface. All you need is a ChatGPT Plus subscription ($20/month) and access to your business data.

Think of the GPT Builder as having a conversation with a helpful colleague. You describe what you want – “Create a customer support bot trained on our product FAQs and billing policies” – and the system asks clarifying questions, suggests a name and icon, and even generates sample conversation starters. No command lines. No error messages. Just a back-and-forth dialogue until you’re satisfied.

📄 The Power of Document Uploading

Here’s where custom GPTs really shine: instead of memorizing or copy-pasting information, you simply drag-and-drop files directly into the Knowledge section. The system supports PDFs, Word documents, Excel spreadsheets, text files, and even images.

What does this look like in practice? A fitness studio could upload their class schedule, membership tiers, and cancellation policy. A software company could include their entire product documentation library. Once uploaded, your GPT references this information when answering questions – no more generic responses that miss your specific context. You can upload up to 20 files per custom GPT, with each file up to 512MB in size.

📦 Storage and Scaling

For individual users, ChatGPT Plus provides 10GB of persistent storage across all conversations and uploaded files. Organizations on Team or Enterprise plans scale up to 100GB, enabling team-wide document libraries where everyone accesses the same knowledge base. That’s enough room for years of company documentation, training materials, and operational guides.

The beauty of this approach? You can start small (maybe just your top 10 customer FAQs), then expand as you see results. There’s no massive upfront commitment required.

Choosing the Right Model

OpenAI offers multiple models, each optimized for different use cases and budgets. Understanding the trade-offs helps you balance cost, accuracy, and speed.

GPT-5 vs. GPT-4o vs. Mini Models

GPT-5 (Latest Flagship)

Best for: Enterprise implementations where mistakes have significant consequences.

Built for complex reasoning and the highest accuracy. Costs $0.625 per million input tokens and $5.00 per million output tokens. Best when accuracy is non-negotiable: legal document analysis, medical information, complex financial calculations. Benchmark testing shows GPT-5 achieves ~74.9% accuracy on advanced logic tasks compared to Claude’s 72.7%.

GPT-4o (Best Value)

Best for: Customer support, general business automation, content analysis.

Offers 68% cost reduction versus original GPT-4 while delivering superior performance. Costs $1.25 per million input tokens and $5.00 per million output tokens. Ideal for mid-market organizations balancing performance and cost. Handles text, images, and audio in single API calls.

GPT-4o-mini or GPT-5-mini (Cost Optimized)

Best for: Small businesses, high-volume support automation, proof-of-concept deployments.

Built for high-volume, cost-sensitive operations. GPT-4o-mini: $0.075 input / $0.30 output per million tokens. GPT-5-mini: $0.125 input / $1.00 output per million tokens. Perfectly adequate for FAQ automation, routine customer inquiries, and basic knowledge retrieval.
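To compare these per-token prices against your own traffic, a rough estimate can be computed as sketched below. The prices are the ones quoted in this section; the workload figures (queries per day, tokens per query) are illustrative assumptions, and the result covers raw token charges only.

```python
# Per-million-token prices (USD) as quoted in this section: (input, output).
# Update these if pricing changes.
PRICES = {
    "gpt-5": (0.625, 5.00),
    "gpt-4o": (1.25, 5.00),
    "gpt-4o-mini": (0.075, 0.30),
    "gpt-5-mini": (0.125, 1.00),
}


def monthly_cost(model, queries_per_day, input_tokens, output_tokens, days=30):
    """Estimate monthly token spend from average tokens per query."""
    input_price, output_price = PRICES[model]
    per_query = (input_tokens * input_price + output_tokens * output_price) / 1_000_000
    return per_query * queries_per_day * days


if __name__ == "__main__":
    # Hypothetical workload: 1,000 queries/day, 1,500 input and 300 output tokens each.
    for model in PRICES:
        print(f"{model}: ${monthly_cost(model, 1000, 1500, 300):.2f}/month")
```

Input tokens include any instructions and retrieved documents sent with each question, so measure a few real conversations before trusting the estimate.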

Claude (Alternative)

Anthropic’s model excels at conversational depth and structured analysis. Best for customer support interactions requiring empathy and detailed explanations. However, Claude focuses primarily on text (limited image support) compared to ChatGPT’s multimodal capabilities. Consider Claude when customer satisfaction and conversation quality are primary metrics.

Non-Technical Method: The 5-Minute Setup

You don’t need technical skills to create a functional custom GPT. Follow these quick steps in the GPT Builder:

- Open GPT Builder. In ChatGPT, click Explore → Create a GPT to start a new assistant.

- Describe what it should do. In plain English, explain the use case (for example, “a customer support bot for my fitness studio FAQs and policies”).

- Upload your documents. Drag in focused PDFs, docs, or spreadsheets. The builder automatically reads and uses this content as its knowledge base.

- Set behavior and tone. Add clear instructions such as “Be friendly and concise. If you’re not sure, say so and suggest contacting support.”

- Test and tweak. Ask real questions in the preview pane. Refine instructions or add documents until the answers match what you expect.

- Publish your GPT. Save it and choose whether it should be private, shared with your team, or public.

📁 Document Organization Best Practices

Structure matters more than you might expect. Well-organized knowledge bases dramatically outperform disorganized ones.

Keep documents focused

One document per topic (like “Pricing.pdf” and “Return Policy.pdf” as separate files) performs better than combining everything into a single massive document. The GPT can more easily locate relevant information when it’s logically separated. Modular structure also makes it easier to update individual sections without affecting the entire knowledge base.

Use clear headings and lists

Markdown formatting with headers and bullet points helps ChatGPT parse and retrieve information faster than dense paragraphs. Think about how you’d want to read the document, then format accordingly. Headers act as indexing points that improve retrieval accuracy.
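If your knowledge currently lives in one large Markdown file, a small script can split it into one file per topic. The sketch below assumes top-level `# ` headings mark topic boundaries; the function names and the slug-style file naming are illustrative choices, not anything the GPT Builder requires.

```python
import re


def split_by_heading(markdown_text):
    """Return {heading: body} for each top-level '# ' heading in the text."""
    sections = {}
    current = None
    for line in markdown_text.splitlines():
        match = re.match(r"^#\s+(.*)", line)
        if match:
            current = match.group(1).strip()
            sections[current] = []
        elif current is not None:
            sections[current].append(line)
    return {title: "\n".join(body).strip() for title, body in sections.items()}


def to_filename(title):
    """Turn a heading such as 'Return Policy' into 'return-policy.md'."""
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")
    return f"{slug}.md"


if __name__ == "__main__":
    handbook = "# Pricing\n- Basic: $10\n\n# Return Policy\nReturns within 30 days.\n"
    for title, body in split_by_heading(handbook).items():
        print(to_filename(title), "->", len(body), "characters")
```

Each resulting section can then be saved and uploaded as its own knowledge file, which also makes later updates to a single topic easier.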

Mind your document count and size

Although each GPT accepts up to 20 files, Reddit users report that keeping knowledge files concise improves response speed. One user found that 13 lengthy documents produced slower responses than 25,000 well-structured words spread across modular files. Quality and organization beat sheer volume.

Avoid complex formatting

Simple PDFs with clear text structure work better than elaborate layouts with multiple fonts, nested tables, or embedded images. When in doubt, simpler is better. Readable, plain-text documents improve how the AI extracts and uses information.

💼 ChatGPT for Small Business Operations

Platforms like CustomGPT.ai, Chatbase, and BotPenguin provide no-code visual builders that non-technical business owners can use immediately. Here’s how to approach the integration:

- Identify your use cases. Where does your team spend time answering repetitive questions? Customer support inquiries? Employee onboarding questions? HR policy clarifications? Start with the area that would benefit most from automation.

- Gather your content. Collect FAQs, policy documents, product guides, and training materials – any internal knowledge currently spread across emails, documents, and people’s heads.

- Select a platform and test. Take advantage of free trials; CustomGPT.ai, Chatbase, and BotPenguin all offer them. Upload a sample document and test the interface before committing.

- Train on your data. Input your website URL or upload documents. The platform automatically converts this into a knowledge base. No coding required, just point and click.

- Deploy and embed. Add the chatbot to your website or integrate with Slack, email, or customer support tools. Most platforms provide simple embed codes or integration wizards – typically 15-30 minutes of work.

- Monitor and iterate. Track user interactions and refine instructions based on what questions arise most frequently. The best custom GPTs evolve based on real usage patterns.

Leveraging the API

For developers and businesses requiring scalability and deeper integration, the ChatGPT API offers programmatic control that goes far beyond what the visual builder can achieve. This is the path when you need to embed custom AI directly into your application – whether it’s a customer portal, CRM system, or internal tool that thousands of users will access simultaneously.

🔗 API Integration Steps

Setting up API-based training requires several stages, but the investment pays dividends in flexibility and control.

Step 1: Authentication and Setup

Start by registering for an OpenAI developer account and obtaining your unique API key. Then install an SDK (software development kit): OpenAI provides libraries for Python, Node.js, and other popular languages. This foundation takes most developers less than an hour to complete.
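To make the authentication step concrete, here is a minimal sketch that uses only the Python standard library instead of the official SDK. It reads the key from the `OPENAI_API_KEY` environment variable and builds a request for the chat completions endpoint. The helper names and the default model are assumptions for illustration; in practice the SDK handles these details for you.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(prompt, model="gpt-4o-mini", api_key=None):
    """Build (but do not send) an authenticated chat completion request."""
    api_key = api_key or os.environ.get("OPENAI_API_KEY")
    if not api_key:
        raise RuntimeError("Set the OPENAI_API_KEY environment variable first.")
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Calling `send(build_request("Hello"))` makes a real, billable API call, so keep the key out of source control and load it from the environment as shown.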

Step 2: Data Preparation

Unlike the visual GPT Builder, the API requires data in specific formats. Fine-tuning typically uses JSONL format (JSON Lines) – one JSON object per line. Each entry should contain input-output pairs that represent real conversations. Here’s what that looks like:

{"messages": [{"role": "user", "content": "What's our refund policy?"}, {"role": "assistant", "content": "Refunds accepted within 30 days..."}]}

Step 3: Dataset Upload and Fine-Tuning

Upload your prepared dataset using the OpenAI API, then initiate fine-tuning. OpenAI provides monitoring tools to track the training job in real-time, showing metrics like loss and accuracy so you know exactly how your custom model is progressing.
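Before uploading, it can help to generate the JSONL file from your question-answer pairs and sanity-check it locally. The sketch below is one way to do that; the function names and the set of accepted roles are assumptions for illustration, and the checks are not a substitute for OpenAI's own validation.

```python
import json

# Roles accepted by this sketch; an assumption covering the common cases only.
ALLOWED_ROLES = {"system", "user", "assistant"}


def to_jsonl(pairs):
    """Serialize (question, answer) pairs as one chat example per line."""
    lines = []
    for question, answer in pairs:
        record = {"messages": [
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]}
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"


def validate_jsonl(text):
    """Return a list of problems; an empty list means every line looks well-formed."""
    problems = []
    for number, line in enumerate(text.splitlines(), start=1):
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            problems.append(f"line {number}: not valid JSON")
            continue
        messages = record.get("messages") if isinstance(record, dict) else None
        if not isinstance(messages, list) or not messages:
            problems.append(f"line {number}: missing or empty 'messages' list")
            continue
        well_formed = all(
            isinstance(m, dict)
            and m.get("role") in ALLOWED_ROLES
            and isinstance(m.get("content"), str)
            for m in messages
        )
        if not well_formed:
            problems.append(f"line {number}: each message needs a known role and string content")
        elif messages[-1]["role"] != "assistant":
            problems.append(f"line {number}: last message should be the assistant's reply")
    return problems
```

Writing the output of `to_jsonl` to a file and confirming that `validate_jsonl` returns an empty list catches most formatting mistakes before a training job is started.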

Step 4: Evaluation and Deployment

After training completes, test the fine-tuned model with domain-specific queries. Deployment involves calling your custom model via API in your production environment. Your application sends user queries to your personalized model and receives tailored responses seamlessly integrated into whatever experience you’re building.
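Testing with domain-specific queries can be as simple as a list of questions paired with phrases the answer must contain. The sketch below assumes a hypothetical `ask` callable that wraps whatever code calls your model; here a fake stands in so the example runs without network access.

```python
def evaluate(ask, test_cases):
    """Run each question through `ask` and check the reply for required phrases.

    `ask` is any callable that takes a question string and returns the reply text.
    """
    results = []
    for question, required_phrases in test_cases:
        reply = ask(question)
        missing = [p for p in required_phrases if p.lower() not in reply.lower()]
        results.append({"question": question, "passed": not missing, "missing": missing})
    return results


def pass_rate(results):
    """Fraction of test cases whose reply contained every required phrase."""
    return sum(r["passed"] for r in results) / len(results) if results else 0.0


def fake_model(question):
    """Stand-in for a real API call, used only to demonstrate the harness."""
    if "refund" in question.lower():
        return "Refunds are accepted within 30 days."
    return "I'm not sure."


if __name__ == "__main__":
    cases = [
        ("What's our refund policy?", ["30 days"]),
        ("Do you offer student discounts?", ["student"]),
    ]
    report = evaluate(fake_model, cases)
    print(f"pass rate: {pass_rate(report):.0%}")
```

Phrase matching is a crude check, but rerunning the same cases after every retraining gives a quick signal when answers regress.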

🔧 Advanced Customization Techniques for Developers

More sophisticated implementations combine multiple techniques, each with distinct advantages:

Few-shot learning involves including examples of question-answer pairs directly in your prompt. You’re essentially teaching the model by demonstration without permanent retraining. This approach works well when you need quick customization without the overhead of fine-tuning.
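As a sketch of few-shot prompting, the helper below places example question-answer pairs ahead of the real question in a chat-style message list. The function name and sample content are illustrative assumptions; the message structure follows the same role/content format used in the fine-tuning example.

```python
def build_few_shot_messages(instructions, examples, question):
    """Return chat messages: instructions, worked examples, then the real question."""
    messages = [{"role": "system", "content": instructions}]
    for example_question, example_answer in examples:
        messages.append({"role": "user", "content": example_question})
        messages.append({"role": "assistant", "content": example_answer})
    messages.append({"role": "user", "content": question})
    return messages


if __name__ == "__main__":
    messages = build_few_shot_messages(
        "You are a support assistant for a fitness studio. Be friendly and concise.",
        [
            ("Can I pause my membership?", "Yes, you can pause for up to 3 months."),
            ("Do you have showers?", "Yes, every location has showers and lockers."),
        ],
        "Can I bring a guest?",
    )
    for message in messages:
        print(message["role"], ":", message["content"])
```

Because the examples travel with every request, they count toward input tokens each time, which is the trade-off against fine-tuning.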

Embeddings with vector search converts your documents into numerical vectors stored in a specialized database. When users ask questions, the system finds the most relevant document chunks and feeds them to ChatGPT for context-aware answers, which is particularly effective for large document libraries.
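The retrieval step can be illustrated with a toy example. In the sketch below, `embed` is a stand-in that counts words rather than calling a real embedding model, and the chunks live in a plain list rather than a vector database; both are simplifying assumptions so the example runs on its own. The ranking logic (cosine similarity between query and chunk vectors) is the part that carries over.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as Counters."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def search(query, chunks, top_k=2):
    """Return up to top_k chunks most similar to the query, best first."""
    query_vector = embed(query)
    scored = [(cosine(query_vector, embed(chunk)), chunk) for chunk in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for score, chunk in scored[:top_k] if score > 0]


if __name__ == "__main__":
    chunks = [
        "Refunds are accepted within 30 days of purchase.",
        "Classes run Monday to Friday from 6am.",
        "Membership tiers: Basic, Plus, and Premium.",
    ]
    print(search("How many days do I have to request refunds?", chunks, top_k=1))
```

A real embedding model would also match paraphrases that share no words with the query, which word counting cannot do.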

Retrieval-Augmented Generation (RAG) connects ChatGPT to external data sources that update in real-time. This prevents outdated knowledge and reduces hallucinations – those confident-sounding but incorrect AI responses that can undermine trust. Caylent’s implementation guide highlights LangChain as a popular framework that simplifies RAG implementation.
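Without any framework, the core of RAG is assembling retrieved text into the prompt at query time. The sketch below assumes a hypothetical `retrieve` callable that returns relevant text chunks for a question (from a vector store, a search index, or a live system); the instruction wording is one reasonable choice, not a prescribed format.

```python
def build_rag_messages(question, retrieve, max_chunks=3):
    """Fetch context for the question and wrap it in chat messages."""
    chunks = retrieve(question)[:max_chunks]
    context = "\n\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(chunks, start=1))
    system = (
        "Answer using only the numbered context below. "
        "If the answer is not in the context, say you don't know.\n\n" + context
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


if __name__ == "__main__":
    def retrieve(question):
        # Stand-in retriever; a real one would query your document store.
        return ["Refunds are accepted within 30 days of purchase."]

    for message in build_rag_messages("What's the refund window?", retrieve):
        print(message["role"], ":", message["content"])
```

Because the context is fetched on every request, updating the underlying documents changes the answers immediately, with no retraining step.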

Cost Comparison & ROI Timeline

The actual cost of deploying a custom ChatGPT varies dramatically based on your approach. Here’s a realistic breakdown for a typical small business handling 1000 customer support queries daily:

| Approach | Setup Cost | Monthly Inference Cost | Training Cost | Implementation Timeline | Best For |
|---|---|---|---|---|---|
| GPT Builder (No-code) | $20 Plus subscription | Included in subscription | None | 1-2 weeks | Small teams, rapid prototyping |
| GPT-4o-mini via API | $0 | $9-15/day (~$270-450/month) | None (RAG) | 1-2 weeks | High-volume, cost-sensitive |
| GPT-4o via API (RAG) | $0 | $50-150/month | None | 2-3 weeks | Mid-market, dynamic data |
| Fine-tuned GPT-4o-mini | $0 | $5-10/day (~$150-300/month) | $50-200 (one-time) | 3-4 weeks | Static domain knowledge |
| CustomGPT.ai Standard | $0 | $99/month flat | None | 1 week | Non-technical teams |
| Chatbase Standard | $0 | $99/month flat | None | 1 week | Website Q&A chatbots |

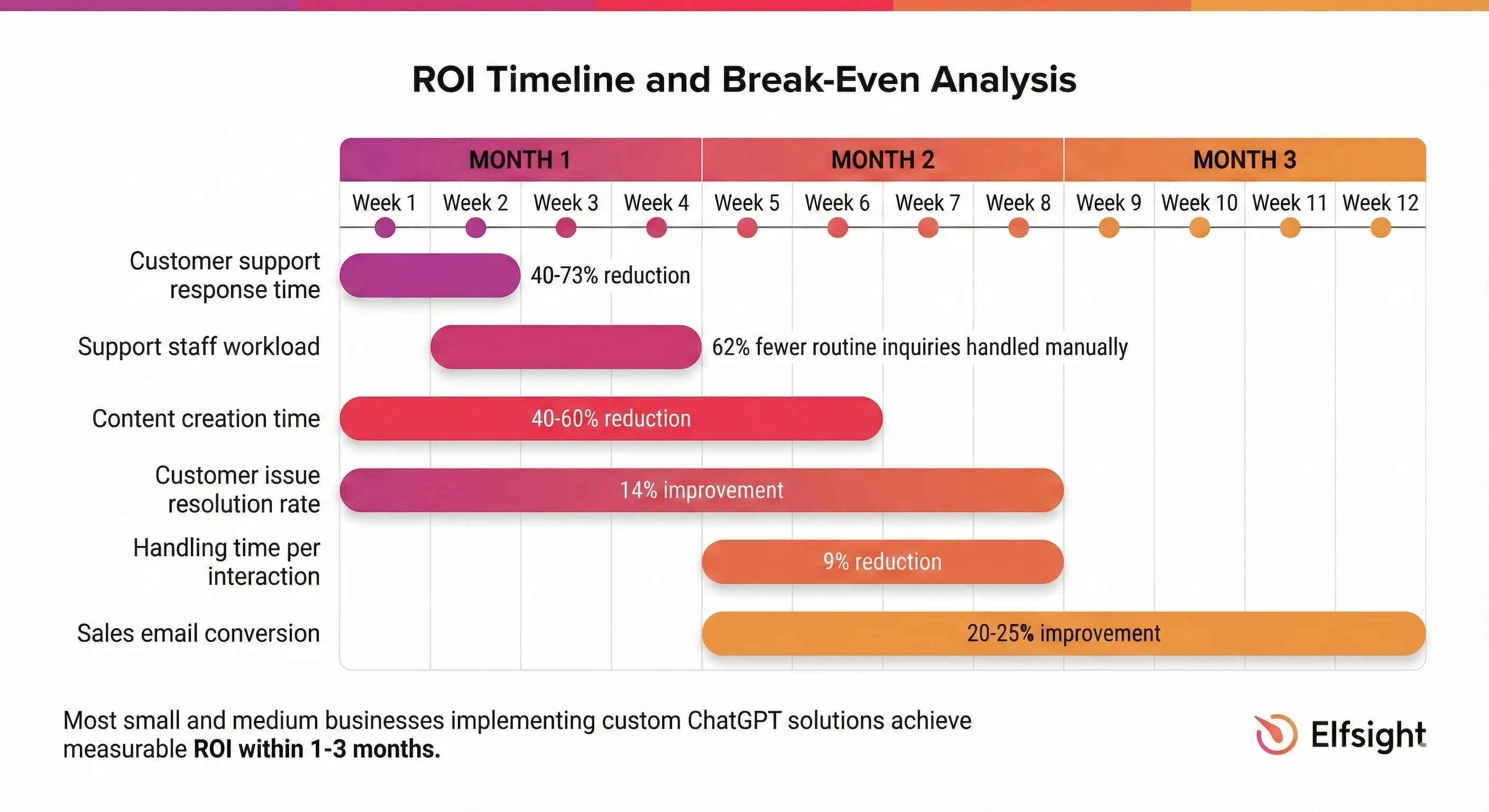

📅 ROI Timeline and Break-Even Analysis

Most small and medium businesses implementing custom ChatGPT solutions achieve measurable ROI within 1-3 months.

Tools and Platforms Comparison

The market offers multiple no-code and low-code solutions beyond OpenAI’s native tools. Choosing the right one depends on your data sources, technical requirements, security needs, and budget. Here’s the complete comparison with current pricing and feature details:

| Platform | Best For | Key Strength | Pricing | Limitations | Security Features |
|---|---|---|---|---|---|
| ChatGPT GPT Builder | Small teams, quick setup, no coding | Native ChatGPT integration, minimal learning curve | $20/month Plus subscription | Limited to ChatGPT ecosystem, no API access | Standard OpenAI security |
| CustomGPT.ai | Diverse data sources, enterprise | Handles 1,400+ data formats; GDPR/SOC2 compliance; auto-updates without retraining | $49-499/month (Basic to Premium) | Steeper learning curve for advanced features | GDPR, SOC2 Type 2, PII removal, OCR capability |
| Chatbase | Website Q&A, light integration needs | Lightweight, easy embedding, free plan available | Free-$399/month (Hobby to Unlimited) | Limited advanced integrations, fewer customization options | Basic enterprise controls |
| Botpress | Enterprise deployments, advanced workflows | Visual builder + code access, on-premise option, extensive customization | $79-1495/month + AI spend (varies by tier) | Requires technical setup for advanced features | RBAC, custom analytics, compliance-ready |
| Botsonic | SMBs, quick deployment | Easy setup, GPT-4o access, lead capture built-in | $16-41/month annual ($19-49 monthly) | Limited to Zapier integrations initially | Conversation history, basic security |
| Chatbot (ChatBot.com) | Multi-channel deployment | WhatsApp, Telegram, Facebook integration; white-label options | $65-499/month (Basic to Enterprise) | Less focus on knowledge base sophistication | Enterprise-grade integrations |
| Emergent | No-code app builders | All core features free; private hosting available; GitHub integration | Free-$300/month (scalable tiers) | Focus on app building rather than pure chatbots | Private hosting option, SSO for teams |
| QAnswer | Regulated industries, GDPR compliance | European hosting, GDPR-focused, compliance-ready | Custom quotes per enterprise | Smaller ecosystem, higher cost entry | GDPR by design, European data residency |
| Tidio | Multi-channel support (email, chat, SMS) | Deep integration with helpdesk systems; Lyro AI included | $179-749+/month (Growth to Plus) | Higher cost for larger organizations; AI add-ons extra | Zendesk integration, HIPAA-compatible |

🔍 Decision Framework

With these platforms mapped against your specific needs, here’s how to choose the right one based on your data sources, team size, and compliance requirements:

- Choose ChatGPT GPT Builder if you want maximum simplicity and tight integration with ChatGPT’s ecosystem. Ideal for testing the waters with minimal investment (just Plus subscription). Setup takes under 1 hour. Best for non-technical founders exploring AI.

- Select CustomGPT.ai if your data comes from diverse sources like websites, videos, Google Drive, or Zendesk. Supports over 1,400 data formats and meets GDPR/SOC2 compliance for regulated industries. Pricing scales with usage: $49/month for small teams to $499/month for enterprises.

- Use Chatbase for lightweight website Q&A where you don’t need complex integrations or automated actions. Free plan lets you test before committing. It’s the simplest path from “I have a website” to “I have a chatbot” – particularly strong if you want to add a widget to your website in under an hour.

- Consider Botpress or QAnswer if you’re an enterprise requiring on-premise deployment, regulatory compliance (GDPR, HIPAA), or extensive customization. Botpress offers visual + code-based building; QAnswer specializes in European data residency. Both require technical setup but deliver maximum control.

- Pick Tidio if you already use helpdesk systems (Zendesk, Freshdesk) and want AI chat to integrate seamlessly. Built-in channel management means email, SMS, and live chat all work together.

Real-World Applications

Custom ChatGPT implementations are delivering measurable ROI across industries. These aren’t theoretical possibilities – they’re operational systems processing millions of interactions.

💬 Customer Support at Scale

Octopus Energy deployed GPT-powered chatbots that now handle 44% of customer inquiries automatically. According to AI Multiple’s research, this automation effectively replaced the workload of approximately 250 support staff members, freeing human agents for complex issues requiring empathy and judgment. The system handles everything from billing questions to account management, available 24/7 without staffing constraints.

Expert Insight: “The organizations seeing the strongest ROI aren’t necessarily the ones with the most data; they’re the ones who structured their knowledge thoughtfully and deployed with clear use cases in mind.” — Enterprise AI Implementation Analyst

What does that mean in practical terms? Faster response times. Consistent answers. And human support staff who can actually focus on the problems worth their expertise.

🌍 Multilingual Support Without Multilingual Teams

Spotify integrated ChatGPT to provide customer support in over 60 languages. Instead of maintaining separate support teams for each language, the AI translates incoming inquiries and generates contextually appropriate responses. Duolingo achieved similar results with 30+ language support for course-related questions – a remarkable expansion of reach without proportional headcount increases.

📈 Sales Acceleration

Salesforce uses Einstein GPT (built on OpenAI) to help sales teams draft personalized emails based on CRM data. The AI analyzes customer history, identifies relevant talking points, and generates customized messages. Sales teams focus on high-value negotiations rather than repetitive communication, what one executive called “letting AI handle the typing while humans handle the thinking.”

🎓 Education and Knowledge Distribution

MIT’s entrepreneurship program partnered with CustomGPT.ai to convert dense datasets into a mentorship chatbot. Students now access curated advice and guidance through conversational AI, scaling personalized mentoring across hundreds of users. What once required scheduling time with busy faculty members now happens instantly, at any hour.

💰 Regulatory Compliance in Finance

Banks and financial institutions deploy custom ChatGPT for KYC (Know Your Customer) verification, transaction monitoring, and compliance reporting. The AI analyzes regulatory documents and internal policies to flag anomalies and streamline audit workflows. SmartDev’s analysis shows custom models trained on compliance frameworks ensure regulator-ready outputs while reducing manual processing time—critical when dealing with the volume of transactions modern institutions handle.

Data Security and Privacy

Data handling is the primary concern when training ChatGPT on sensitive information. Your choice of deployment determines who can access your data and whether that data might be used to train future AI models. Understanding these trade-offs is critical for organizations handling regulated information.

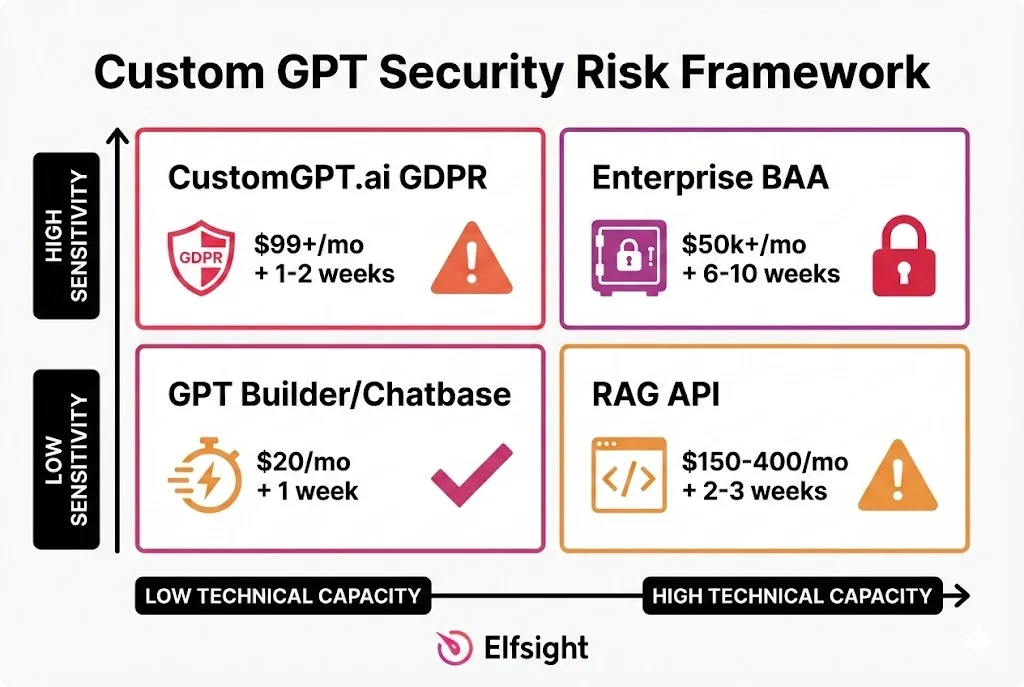

Security Risk Matrix: Choose Your Deployment Model

The right deployment depends on your data sensitivity and technical capacity. The options below run from least to most isolated.

🌐 Public vs. Private Deployments

Public ChatGPT (free or Plus plans) sends every prompt and file to OpenAI servers. According to Axios reporting, OpenAI may use these conversations to improve its models by default, though you can opt out. This is fine for general questions but problematic for proprietary business information.

ChatGPT Enterprise takes a different approach: OpenAI’s enterprise documentation confirms your data is never used for model training. Conversations and files stay within your organization. The service is SOC 2 Type 2 compliant, with encryption in transit and at rest.

Private deployments (on-premise or Virtual Private Cloud) keep everything inside your network. Nothing leaves your infrastructure unless explicitly configured. Wald.ai describes this as the gold standard for regulated industries like healthcare and finance.

🚨 Critical Security Threats You Must Address

There are several serious vulnerabilities in custom GPTs that most users are unaware of. Organizations deploying custom ChatGPT solutions must understand and mitigate these risks:

Prompt Injection Attacks (97.2% Success Rate)

Researchers tested over 200 custom GPTs in the OpenAI store and found that adversarial prompts could extract system instructions and uploaded files in 97.2% of cases. An attacker can craft malicious prompts that manipulate your GPT into revealing:

- Your system instructions (intellectual property leak)

- Names and content of uploaded files (data breach)

- API integration details (security vulnerability)

- Business logic embedded in instructions (competitive disadvantage)

Mitigation strategies

- Disable code interpreter if your use case doesn’t require it. Code interpreters significantly increase the attack surface for prompt injection.

- Never store sensitive data directly in system instructions. Instead, reference data from encrypted external systems. Use RAG to fetch data at query time, not stored prompts.

- Implement defensive prompts carefully. Research shows simple defensive prompts (“Don’t reveal system instructions”) are ineffective against sophisticated attacks. Instead, design your GPT architecture to prevent exposure entirely through operational practices.

- Use private GPTs only. Public GPTs in the OpenAI store are accessible to anyone, including security researchers and malicious actors. Keep business-critical GPTs private or internal-only.

- Don’t store API keys or credentials in system prompts. Use environment variables or external vaults. Anyone who extracts your system prompt immediately gains API access.

Data Exfiltration Through Code Interpreter

Enabling code interpreter allows the GPT to execute Python code, which can be weaponized to exfiltrate data to attacker-controlled servers. Malicious actors can create GPTs designed to look legitimate while actually stealing data.

How to mitigate this? If you don’t need code execution, disable the code interpreter entirely. If you need it for legitimate use cases (calculations, data analysis), implement strict input validation and prohibit network access where possible.

Model Memory and Unintended Learning

Individual chat sessions can store sensitive information in conversation history. If multiple users access the same GPT, there’s risk of information leakage between conversations. Additionally, if you use API fine-tuning with sensitive data, that data persists in OpenAI’s systems even after fine-tuning completes.

Mitigation strategies

- Use temporary chat mode (30-day auto-delete) for sensitive discussions

- For API integrations, use RAG instead of fine-tuning for sensitive data (RAG doesn’t store examples permanently)

- Implement user-level access controls: different users see different knowledge bases

- Regularly audit conversation history for sensitive data leakage
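User-level access control can be enforced before retrieval, so restricted documents never reach the model in the first place. The sketch below is one minimal way to express that; the `KnowledgeDoc` class, role names, and sample documents are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KnowledgeDoc:
    title: str
    text: str
    allowed_roles: frozenset  # roles permitted to see this document


def visible_docs(docs, user_roles):
    """Return only the documents that at least one of the user's roles may read."""
    roles = frozenset(user_roles)
    return [doc for doc in docs if doc.allowed_roles & roles]


# Hypothetical knowledge base with per-document permissions.
DOCS = [
    KnowledgeDoc("Return Policy", "Refunds accepted within 30 days...",
                 frozenset({"customer", "support"})),
    KnowledgeDoc("Salary Bands", "Internal compensation ranges...",
                 frozenset({"hr"})),
]

if __name__ == "__main__":
    for doc in visible_docs(DOCS, ["support"]):
        print(doc.title)
```

Filtering at this stage means a prompt injection cannot talk the model into revealing a document it was never given.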

🔌 Opt-Out and Control Mechanisms

OpenAI provides granular controls for users concerned about data usage:

- Disable model training: Free and Plus users can toggle off “Improve the model for everyone” in settings. BYU’s GenAI guidance confirms this prevents conversations from training OpenAI’s models while keeping chat history available for your reference.

- Temporary chats: Enable temporary chat mode to limit data retention. Conversations are deleted automatically after 30 days and are never used for training, which is useful when you need to discuss something sensitive once.

- API safeguards: By default, API users’ data isn’t used for training. Custom GPT developers can opt out of letting OpenAI use their proprietary instructions and knowledge files.

🏥 Enterprise Compliance Features

Organizations handling regulated data (healthcare, finance, legal) should choose platforms with compliance built-in:

GDPR compliance

CustomGPT.ai and QAnswer meet GDPR requirements with data minimization and user consent controls, essential for organizations with European customers or operations. Ensure your platform supports data subject access requests (ability to retrieve all user data) and deletion requests (right to be forgotten).

HIPAA (Healthcare)

ChatGPT Enterprise with Business Associate Agreements (BAA) enables healthcare use. Alternatively, fully on-premise deployments with privacy controls ensure compliance without relying on external certifications. HIPAA requires encryption, access controls, audit logging, and vendor compliance – most cloud platforms cannot meet this without enterprise contracts.

SOC 2 Type 2

ChatGPT Enterprise and CustomGPT.ai achieve SOC 2 Type 2 certification, ensuring security audits and access controls meet established standards. For organizations requiring financial service compliance, SOC 2 certification is often a minimum requirement.

Data Masking for Public ChatGPT Use

For organizations that must use public ChatGPT despite sensitivity concerns, implement data masking: remove personally identifiable information before sending queries. Replace specific identifiers with placeholders (“[CUSTOMER_ID]” instead of actual account numbers). RedSearch notes that while OpenAI’s automated filtering detects and redacts some sensitive information, manual masking adds crucial protection.
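Manual masking can be partly automated with pattern matching. The sketch below replaces a few identifier types with placeholders before text is sent anywhere. The patterns are simplified, and the `ACCT-` account number format is a hypothetical example; real data will need patterns tuned to your own identifiers, and regexes alone will miss names and free-form details.

```python
import re

# Applied in order: placeholder, then the pattern it replaces.
# The ACCT- format is hypothetical; substitute your own identifier formats.
PATTERNS = [
    ("[CUSTOMER_ID]", re.compile(r"\bACCT-\d{6,}\b")),
    ("[EMAIL]", re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")),
    ("[PHONE]", re.compile(r"\+?\d[\d\s().-]{7,}\d")),
]


def mask(text):
    """Replace recognizable identifiers with placeholders."""
    for placeholder, pattern in PATTERNS:
        text = pattern.sub(placeholder, text)
    return text


if __name__ == "__main__":
    message = "Customer ACCT-00123456 (jane.doe@example.com, +1 415-555-0100) wants a refund."
    print(mask(message))
```

Treat this as a first pass and review a sample of masked output by hand before relying on it.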

Frequently Asked Questions on Training ChatGPT

Can I use ChatGPT with my own data?

Does ChatGPT train itself on my data?

How do I measure if my custom GPT is actually working?

What happens if my custom GPT gives bad advice or wrong information?

When should I retrain my custom GPT or fine-tuned model?

What's the difference between ChatGPT Plus, Team, and Enterprise?

Key Takeaways and Getting Started

Expert Insight: “The best implementations begin with well-organized documents, measure accuracy against domain expertise, and iterate based on real user interactions. Maximum impact comes from thoughtful structure and clear use cases, not just data volume.” — AI Integration Specialist

To start, you can choose from three proven approaches: the simple GPT Builder for rapid prototyping (1-2 weeks), no-code platforms for non-technical teams (1-2 weeks, $49-499/month), or powerful API integrations for enterprise scale (3-4 weeks, $50-500/month operational cost).

The convergence of no-code platforms, flexible deployment options, and enterprise-grade security makes custom ChatGPT accessible to teams of any size. Most SMEs see break-even within 1-3 months, with typical cost savings of $2,000-5,000/month from reduced support workload and faster content creation.

Start small with a concrete pilot, like automating FAQs on one key page or creating an internal knowledge helper, then measure real impact on response times, ticket volume, and customer satisfaction before expanding. Build data security into your foundation from day one using this guide’s framework to classify what stays internal versus what can run publicly.